I have been building software for nearly 20 years and keep stumbling upon the idea that software is fractal. It is a nagging feeling that I have been unable to concretise. This post is a (probably poor) attempt at doing so and at explaining why it matters.

What does “fractal” mean?

Let’s ask ChatGPT, the source of all modern knowledge.

A fractal is a complex shape that looks similar at any scale. If you zoom in on a part of a fractal, the shape you see is similar to the whole. Fractals are often self-similar and infinitely detailed. They can be found in nature in patterns like snowflakes, coastlines, and leaf arrangements.

ChatGPT, 2024

In other words, fractal structures exhibit similar shapes (and, potentially, behaviour) at different scale levels (i.e., zooming in and out). Thus, one can use a similar set of concepts and rules to understand them regardless of where in the scale you focus.

Examples of software “fractalism”

What better way to show that software is fractal than with examples? Pretty much all software systems combine the following components:

- Code, which captures desired behaviour.

- Messages, which represent communication between code components.

- Load Distribution, which guarantees the best possible performance.

Example 1 – Code

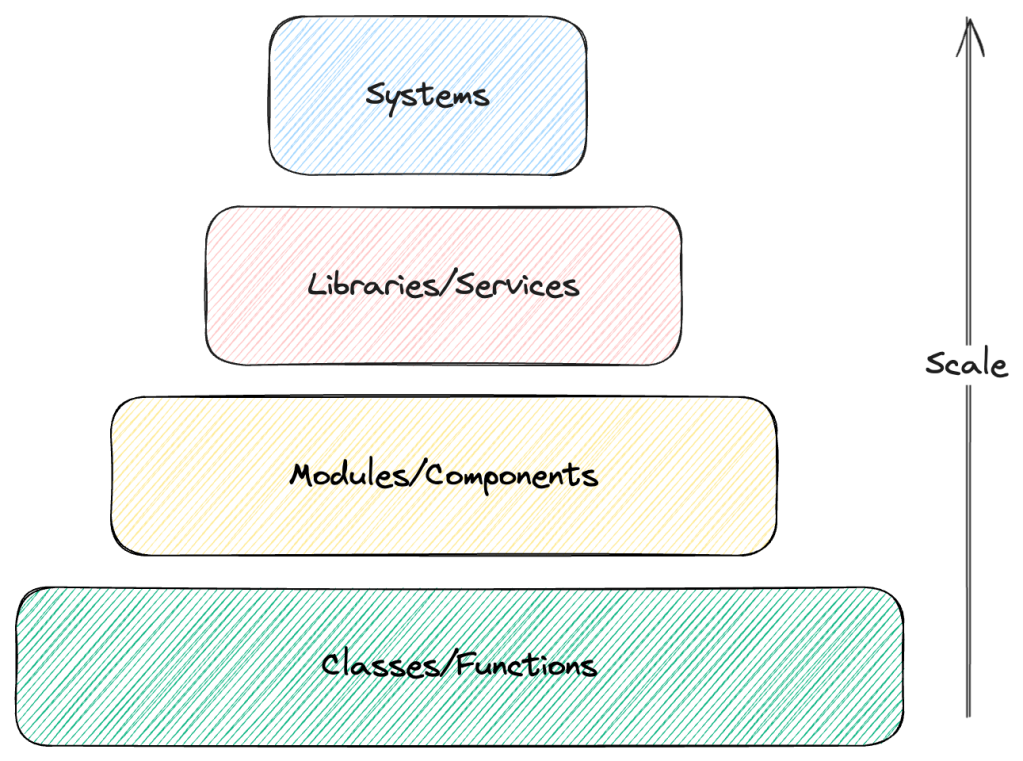

First, let’s look at how we capture “behaviour”, i.e., code that executes what we want the software to do. While not the lowest level, classes and functions are a reasonable place to start: they hold the code that developers write to implement a specification of the desired behaviour.

Every class or function offers a series of “promises” (à la Promise Theory) about what it can do. Classes and functions use other classes and functions to compose higher-order behaviours. Modules and components aggregate classes and functions, producing new promises for more complex behaviours.

Eventually, modules and components are built up to (micro/macro)services, resulting in fully-fledged systems.

At every level, we forfeit details. The newly formed aggregate hides information about how it implements its promises, which isn’t relevant for those consuming them. It is crucial to design the appropriate APIs (aka, boundaries) and to avoid premature abstractions built on incomplete information (see Rule of Three).
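A minimal Python sketch of that layering. Every name here (`parse_amount`, `apply_tax`, `Invoicing`) and the 20% tax rate are hypothetical, invented only to illustrate the idea: two functions each make a small promise, and an aggregate composes them into a bigger promise while hiding how it keeps it.

```python
from decimal import Decimal

# Hypothetical example: all names and the 20% rate are assumptions for illustration.

def parse_amount(text: str) -> Decimal:
    """Promise: turn a string such as '10.50' into an exact decimal amount."""
    return Decimal(text.strip())


def apply_tax(amount: Decimal, rate: Decimal) -> Decimal:
    """Promise: return the amount plus tax, rounded to two decimal places."""
    return (amount * (Decimal("1") + rate)).quantize(Decimal("0.01"))


class Invoicing:
    """Aggregate: promises a total for raw line items and hides parsing and tax details."""

    def __init__(self, tax_rate: Decimal = Decimal("0.20")) -> None:
        self._tax_rate = tax_rate  # implementation detail, not part of the promise

    def total(self, raw_amounts: list[str]) -> Decimal:
        net = sum((parse_amount(a) for a in raw_amounts), Decimal("0"))
        return apply_tax(net, self._tax_rate)


print(Invoicing().total(["10.00", " 5.50 "]))  # prints 18.60
```

A consumer of `Invoicing.total` never needs to know that parsing or rounding happens underneath, which is the same trade a service makes when it exposes an endpoint instead of its internals.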

Example 2 – Messages

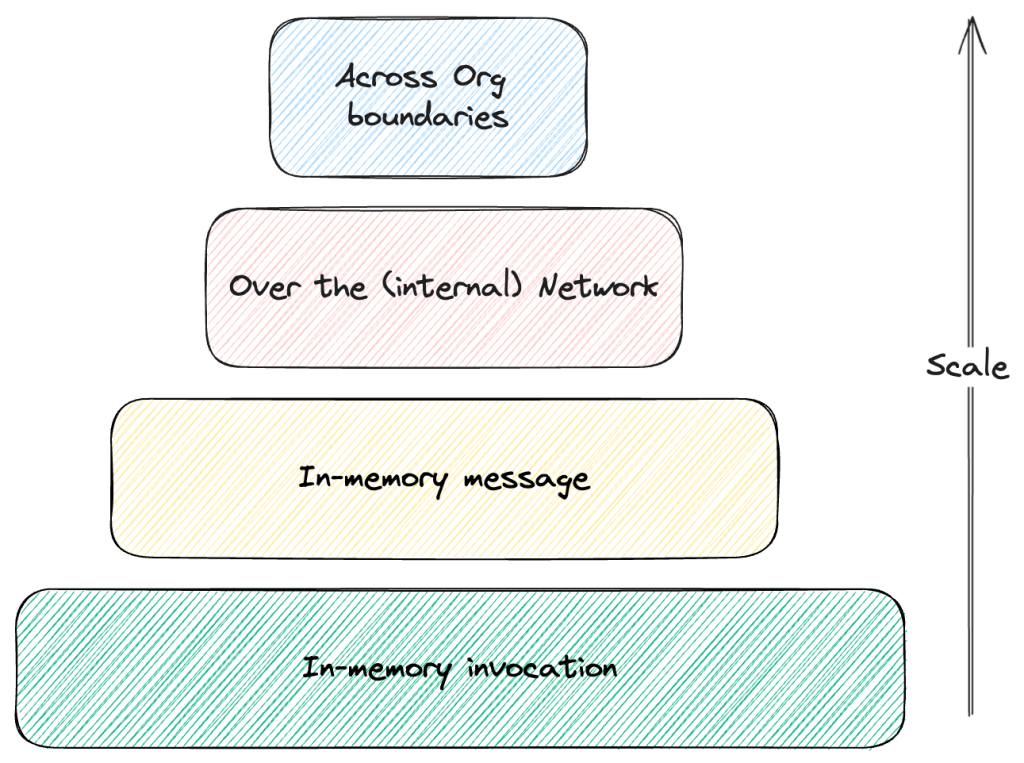

It is all well and good to package your code with the appropriate boundaries and promises, but it will only be helpful if someone/something uses it. Users and machines decide to consume your promises, but they require a way to “exercise” them. Once again, messaging happens at every layer.

Clients and servers can be of any nature: at a low level, they can be two classes or functions. For example, one function A invokes another function B. B expects a given set of parameters, which A prepares and “sends” in its invocation. This is akin to a message from A to B, with A waiting for the result. The interaction can be async/sync, blocking or non-blocking; it doesn’t matter. The mechanics are the same.

Object orientation done right was all about message passing. Smalltalk, the OG object-oriented language, represented every interaction as a message sent to an object.

Components and modules rely on similar interactions. They can be as low-level as those between classes and functions (i.e., direct invocation through a memory address) or more abstract (such as in-memory event buses, e.g., Spring application events).

Once we move further up and need to cross the network to communicate, messages become more explicit. Events, commands, notifications, etc., represent messages that different code components/services exchange in a (not always) beautiful choreography to implement complex behaviours. In this layer, you find REST APIs with JSON payloads, gRPC (or, if you are unlucky enough, predecessors like CORBA, DCOM, Java RMI, .NET Remoting, etc.), Kafka/Avro, etc.

At the top, we have systems interacting with other systems. It is the same metaphor (and, quite often, the same protocols and formats) but with reduced trust and a more straightforward boundary/interface.

Some components repeat themselves across all levels:

- Sender / Recipient information

- Body (parameters and values)

- Metadata (time reference, size, integrity checksums)
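To make those three parts concrete, here is a small sketch that wraps a plain function call in an explicit envelope. The `Message` class, the `deliver` helper and the `add` function are hypothetical names made up for this post, and SHA-256 over a JSON encoding is just one possible choice of integrity checksum.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Message:
    """Hypothetical envelope: sender/recipient, body, and metadata."""

    sender: str
    recipient: str
    body: dict  # parameters and values
    sent_at: float = field(default_factory=time.time)  # time reference

    def payload(self) -> bytes:
        # Stable encoding so the same body always yields the same bytes.
        return json.dumps(self.body, sort_keys=True).encode("utf-8")

    @property
    def size(self) -> int:
        return len(self.payload())

    @property
    def checksum(self) -> str:
        return hashlib.sha256(self.payload()).hexdigest()


def add(a: int, b: int) -> int:
    return a + b


def deliver(message: Message) -> int:
    """Recipient side: unpack the body into parameters and invoke the function."""
    return add(**message.body)


msg = Message(sender="caller", recipient="add", body={"a": 2, "b": 3})
result = deliver(msg)
print(result, msg.size, msg.checksum[:8])
```

Calling `add(2, 3)` directly carries the same information implicitly; a network hop only forces us to write the envelope down.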

Example 3 – Load Distribution

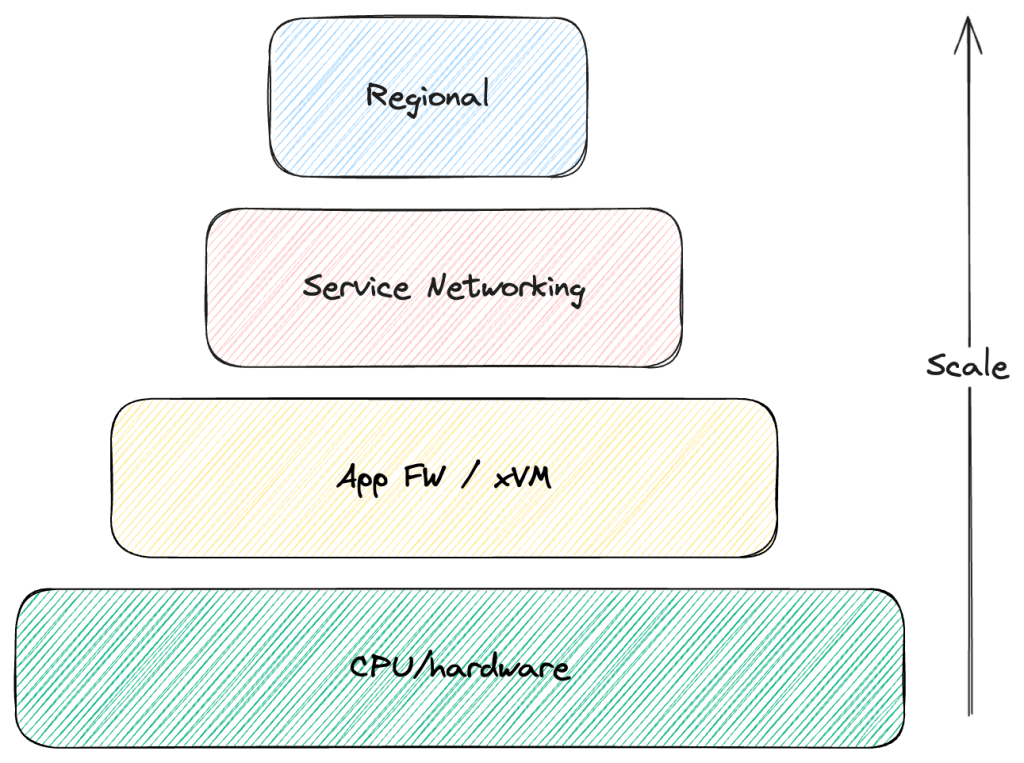

So far, we have behaviour represented as code and “communication intent” captured as messages. We now need to make sure those intents travel between code components. Enter “Load Distribution”.

At the lowest level, we have memory addresses (i.e., find my message at the beginning of this pointer and read consecutively) and the kernel scheduler assigning CPU time “equally”. This is both a way to distribute the message representing the intent and a way to implement a form of load balancing, since multiple code components “compete” for scarce resources. These components “wait” until resources are assigned; in other words, they queue. We scale by throwing more CPU cores at every problem (if we are lucky enough to have a parallelisable problem).

Moving up the stack, we find thread pools and managers that aim to multiplex the underlying physical (or virtual) CPUs as exposed by the OS. It is the same premise but applied at a higher level of abstraction with added support from the tooling. We start dealing with various types of explicit (in-memory) queues and other synchronisation objects.
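As a rough illustration of this layer, the sketch below uses Python's standard `concurrent.futures.ThreadPoolExecutor`. The `handle` function and the choice of two workers for five tasks are assumptions picked only to make the queueing visible.

```python
from concurrent.futures import ThreadPoolExecutor


def handle(request_id: int) -> str:
    """Hypothetical unit of work competing for a worker thread."""
    return f"handled {request_id}"


# Five tasks, two workers: at most two run at a time,
# the rest wait in the executor's internal work queue.
with ThreadPoolExecutor(max_workers=2) as pool:
    results = list(pool.map(handle, range(5)))  # map preserves input order

print(results)
```

The caller only sees "submit work, get results"; which thread runs which task, and when, is hidden behind the pool, much like the kernel scheduler hides which core runs which thread.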

Things become more interesting when we get to the service level and the network is involved. Addresses move from memory pointers to explicit network addresses (i.e., IP), Load Balancers become network appliances, and where we once threw CPU cores at problems, we now throw instances/containers (if we are lucky enough to have a share-nothing architecture).
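The same idea one level up can be sketched as a toy round-robin balancer. This is not how any particular load balancer is implemented; the `RoundRobinBalancer` class and the backend addresses are hypothetical, and round-robin is just the simplest policy to show.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Toy balancer: hands out backend addresses in a fixed rotation."""

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = cycle(backends)

    def pick(self) -> str:
        return next(self._backends)


# Hypothetical instances; scaling out means adding entries to this list.
balancer = RoundRobinBalancer(["10.0.0.1:8080", "10.0.0.2:8080"])
assignments = [balancer.pick() for _ in range(4)]
print(assignments)
```

Swap "backend address" for "worker thread" and this is the thread pool again, only with IP addresses instead of memory.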

At the top level, we start thinking of distributing load across (cloud provider) Regions. While uncommon from a load perspective (most companies don’t need this), it can be required for availability/redundancy purposes. At this point, we have zoomed out so much that the whole system deployed to a set of Availability Zones in a single Region is just a node in the graph.

Why does this all matter?

I believe all of this matters because, when problems, patterns and structures repeat across levels, solutions become reusable.

For example, our mental model should not be different when we test a class/function or a whole service. What differs is “how” we apply that mental model.

| Aspect | Class/Function | Service |
| --- | --- | --- |
| Boundary | Method/Function signature | Public API |
| Arrange | Prepare input data; set dependency expectations (e.g., mocks, stubs); inject dependencies (other classes) | Prepare input message; roll out infrastructure (database, message bus); prepare required state (e.g., preload database) |
| Act | Invoke method/function | Send “message” to public API |
| Assert | Await results; validate returned data; verify dependency expectations | Await results; validate response message; validate state mutations |
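The table can be sketched as two tests that share the same Arrange/Act/Assert shape. Everything here is hypothetical: `discount` stands in for any function, and `PricingService` is an in-process stand-in for a deployed service (a real test would roll out infrastructure and send the request over the network).

```python
def discount(price: float, percent: float) -> float:
    """Function-level boundary: the signature is the contract."""
    return round(price * (1 - percent / 100), 2)


def test_function_level() -> None:
    price, percent = 200.0, 10.0        # Arrange: prepare input data
    result = discount(price, percent)   # Act: invoke the function
    assert result == 180.0              # Assert: validate returned data


class PricingService:
    """In-process stand-in for a service with a public API and its own state."""

    def __init__(self, store: dict) -> None:
        self._store = store  # plays the role of the database

    def handle(self, request: dict) -> dict:
        final = discount(self._store[request["sku"]], request["percent"])
        self._store[request["sku"]] = final  # state mutation
        return {"status": 200, "price": final}


def test_service_level() -> None:
    store = {"sku-1": 200.0}                                      # Arrange: preload state
    service = PricingService(store)
    response = service.handle({"sku": "sku-1", "percent": 10.0})  # Act: send "message"
    assert response == {"status": 200, "price": 180.0}            # Assert: response message
    assert store["sku-1"] == 180.0                                # Assert: state mutation


test_function_level()
test_service_level()
```

The three steps are identical in both tests; only the cost of each step changes as we zoom out.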

This is just one of many examples where, while the specifics change, the mental model remains the same. Other examples include:

- (Some) SOLID principles apply at multiple levels

    - Both SRP and ISP are about granularity, which leads to Microservices architecture.

    - Extending is always safer than modifying, whatever your boundary.

    - The Inversion of Control that DI seeks can be achieved at the service level by injecting client libraries that decouple callers from the actual service implementation(s).

- Data structures showing up at multiple levels

    - Hashmaps (along with lists) are among the most common data structures in software engineering (every JavaScript object is basically one).

    - Key-value databases are wildly popular and pretty much the same thing, at a different scale.

- Contracts as a way to enforce promises

    - At the language level, we have method signatures with types (if you are using statically typed languages).

    - At the system level, we have attempts like Protobuf, Avro, JSON Schema and the whole “Data Contracts” movement to replicate this.
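Taking the contracts example from the list above, here is a sketch of the same promise enforced at two scales. The names (`CreateUser`, `create_user`, `parse_create_user`) are hypothetical, and the hand-rolled `CREATE_USER_SCHEMA` dictionary is only a stand-in for a real schema technology such as Protobuf, Avro or JSON Schema, not an imitation of their APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CreateUser:
    name: str
    age: int


# Language level: the typed signature is the contract (checked by a type checker).
def create_user(cmd: CreateUser) -> str:
    return f"created {cmd.name}"


# System level: an untyped payload crosses a boundary, so the contract
# has to be checked explicitly. Hypothetical stand-in for a real schema.
CREATE_USER_SCHEMA = {"name": str, "age": int}


def parse_create_user(payload: dict) -> CreateUser:
    for key, expected in CREATE_USER_SCHEMA.items():
        if key not in payload:
            raise ValueError(f"missing field: {key}")
        if not isinstance(payload[key], expected):
            raise ValueError(f"field {key} must be {expected.__name__}")
    return CreateUser(name=payload["name"], age=payload["age"])


print(create_user(parse_create_user({"name": "Ada", "age": 36})))  # prints created Ada
```

Both layers answer the same question ("is this input what I promised to accept?"); the system-level version just cannot lean on a compiler to ask it.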

If this rings true, then we should focus more on the rules that handle these commonalities and less on the specifics. If we see these patterns emerging, solutions that we know well in one scale can apply to other scales, just like your knowledge about SQL helps you with multiple databases or your mastery of Java translates (to a degree) to Kotlin or C#.

Summary

This metaphor is neither fully consistent (i.e., there will be many cases where it doesn’t apply) nor complete (i.e., I’d need to dedicate the rest of my career to finding all the missing examples).

However, I believe once you start “seeing it”, it cannot be “unseen”. You will find more and more cases where your knowledge reapplies and there is a feeling of familiarity.

Some might call it “common sense,” but I prefer to think of it as more elegant than that.